Table of Contents: Deep Learning Posts

2000, Jan 01

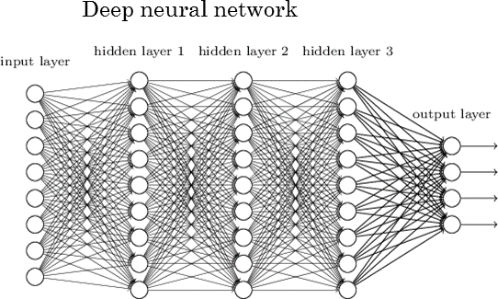

Deep Learning Concepts

- Convolution arithmetic

- Summary of Activation Functions

- PReLU (Parametric ReLU)

- ReLU6 and Why It Is Used

- Gram Matrix Used in Style Transfer

- Comparing Stride and Pooling

- Batch Normalization

- Order of Applying Batch Normalization, Dropout, and Pooling

- L1, L2 Regularization

- What Is GAP (Global Average Pooling)?

- The Depthwise Separable Convolution Operation

- Upsampling with Transposed Convolution

- Transposed Convolution and the Checkerboard Artifact

- Understanding the Attention Mechanism

- Positional Encoding: Concept and Purpose

- The Transformer Model (Attention Is All You Need)

- Vision Transformer

- Active Learning, and Active Learning Using Learning Loss and Bayesian Deep Learning

- Uncertainty in Deep Learning

- Bayesian Neural Network

- What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision

- Multi-Task Deep Learning: Concepts and Practice

- Notes on Deformable Convolution

Backbone Network

- Inception

- Xception

- ResNet

- Dilated ResNet

- SqueezeNet

- SqueezeNext

- MobileNet

- MobileNet v2

- MobileNet v3

- ShuffleNet

- DenseNet

- EfficientNet

- RegNet (Designing Network Design Spaces)

- BiFPN Architecture and Code

Lightweight and Efficient Networks

- Bag of Tricks for Image Classification with Convolutional Neural Networks

- Trends in Lightweight Deep Learning Techniques

- Quantization and Quantization-Aware Training

Loss Functions

- Negative Log Likelihood Loss

- Huber Loss and BerHu (Reverse Huber) Loss (A robust hybrid of lasso and ridge regression)

- In Defense of the Unitary Scalarization for Deep Multi-Task Learning

Generative Models

- Probability Model

- (Probabilistic) Discriminative Model

- Basic Bayesian Theory

- Basic Information Theory

- All About AutoEncoders (1): Revisit Deep Neural Network

- All About AutoEncoders (2): Manifold Learning

- All About AutoEncoders (3)

- All About AutoEncoders (4)

- All About AutoEncoders (5)